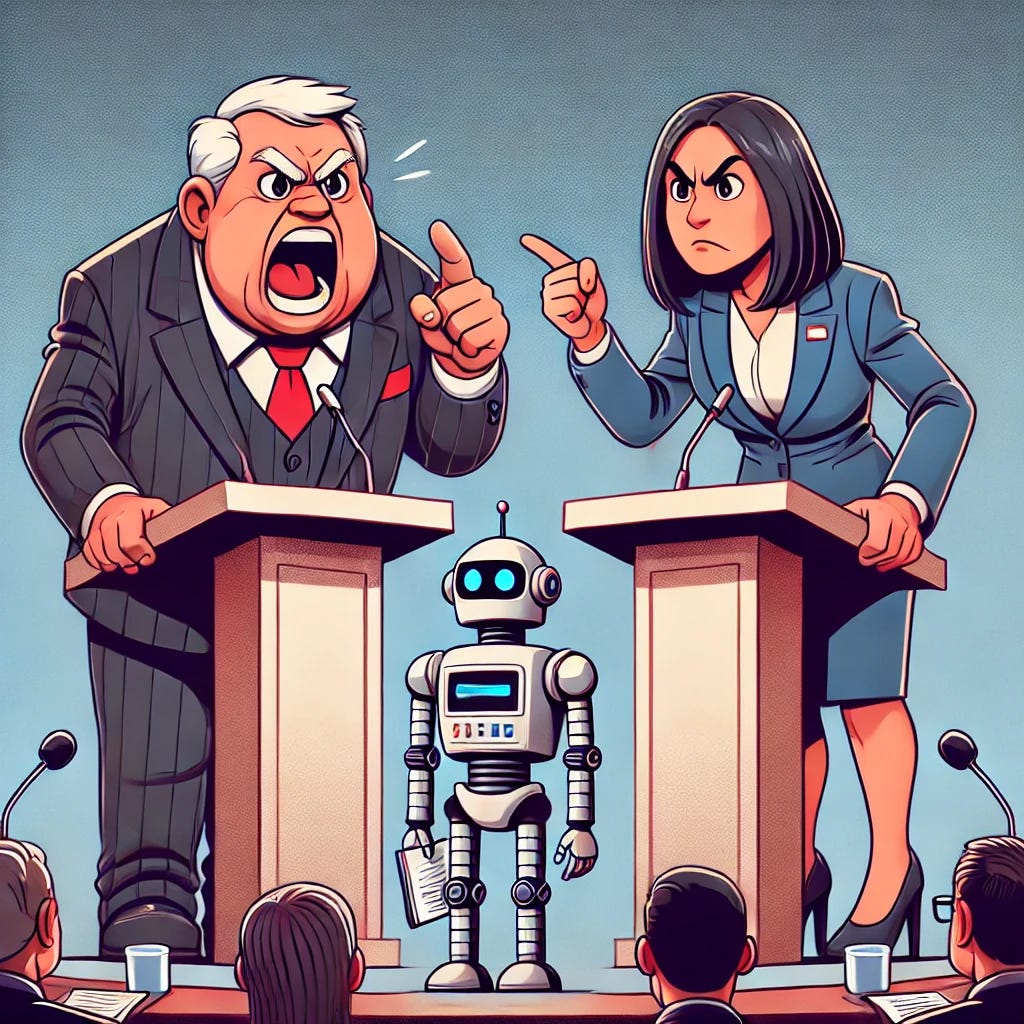

AI should live fact-check presidential debates

And other proposals for making debates more about policy and less about personality.

OK, the Trump-Harris debate wasn’t as awful as the Trump-Biden debate in June, let alone the shouting match that was the first Trump-Biden debate in September 2020. The moderators did step in to fact-check a handful of Trump’s falsehoods (though none of Harris’s). But only absurd ones, like his claims that he won the 2020 election and that immigrants are eating people’s pets. Dozens of others from both candidates slipped through. So here’s an idea: could you use AI to provide fact-checks in real time?

The TL;DR: Yes. And there are a lot of other things you could do to make presidential debates more useful to voters, while still making good television.

The New York Times’s fact-checks of the debate listed more than 40 lies, exaggerations, and misleading statements from the two candidates. I took a handful of those claims and ran them through the paid versions of both ChatGPT and Perplexity (click on those links to see their answers). Both provided instant responses that closely matched those of the Times fact-checkers but were much more detailed. Some highlights:

Harris: “60 times [Trump] tried to get rid of the Affordable Care Act.” The NYT noted that Trump “urg[ed] Republicans in Congress in 2017 to pass several bills to repeal and replace major portions of” the ACA. ChatGPT named some of these bills, noting that one—the American Health Care Act—did pass in the House. Perplexity named that bill too. All three sources noted that there had been numerous attempts to repeal Obamacare before Trump, but far fewer than 60 on his watch.

Harris: “We’ve invested $1 trillion in the clean energy economy.” Harris’s statement implied, to me, that the US government itself had invested that much. But the NYT said the US government’s incentives had spurred $700 billion in private investments, citing the Clean Investment Monitor. The two AIs interpreted Harris more correctly, in my view, and listed the true amounts of direct federal spending at some $400-$500 billion. Perplexity also noted $322 billion in private investment, sourcing that to a NYT article from May. That piece too cited the Clean Investment Monitor, but appears to have got the figure wrong. In short, I think the NYT came off worse here.

Trump: Inflation under Biden reached 21%, “the worst in our nation’s history.” All three sources noted that inflation peaked at 9.1% in 2022 (the chatbots also gave the month: June). Perplexity said the highest inflation since the advent of the consumer price index was 20.49% in 1917, while ChatGPT said it was 23.7% in 1920. There are reputable sources for both figures, and at least one other credible source with yet different figures.
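For reference, figures like the 9.1% peak are 12-month (year-over-year) changes in the consumer price index, computed as below, where $\mathrm{CPI}_t$ is the index level in month $t$. Applying the same formula to annual averages, or comparing December to December, gives different numbers for the same year, which may be part of why credible sources disagree about the historical record.

$$
\pi_t = 100 \times \frac{\mathrm{CPI}_t - \mathrm{CPI}_{t-12}}{\mathrm{CPI}_{t-12}}
$$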

Trump: After Roe v. Wade was decided, “Every legal scholar… wanted” abortion policy “to be brought back to the states.” Both chatbots noted that scholarship was divided on the issue. Because I ran this test a couple of days after the debate, Perplexity even cited a couple of scholars, interviewed after the debate, who said Trump’s claim was obviously false.

Harris: Trump presided over “one of the highest [trade deficits] we’ve ever seen in the history of America.” Both AIs rated the claim as largely true, while the NYT called it “misleading.” It’s certainly disingenuous, given that the trade deficit got even bigger under Biden. Score one for the Times.

My takeaway: it’s an even match between the AI and human fact-checkers, with each getting one or two things wrong. But no human debate moderator could be expected to marshal all those facts in real time. And as good as the NYT fact-checkers are, they’re still several minutes behind the candidates, and their reporting only appears on the NYT.

So, my modest proposal: what if the TV network assigned a couple of fact-checkers to run every one of the candidates’ factual claims through a chatbot and display its answers to the moderators on a screen? After each candidate finished an answer, the moderators could read off a list of that candidate’s lies and exaggerations, or snippets could be posted directly in the chyrons in real time. That would be fairer (nobody could then claim it was a “3-on-1 debate,” as Trump surrogates did) and would quickly show the public just how much their politicians are deceiving them. It might even incentivize the candidates to lie less.
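To make the proposed workflow concrete, here is a rough sketch of its data flow in Python. Everything in it is an assumption for illustration: the `Verdict` record, the rating labels, the 80-character caption limit, and `demo_checker`, which stands in for the human fact-checkers and their chatbot with a few canned answers. It shows one answer’s claims being checked, the flagged ones collected for the moderator to read out, and each verdict trimmed to fit a chyron.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical rating labels that get read out or shown on screen.
FLAGGED = {"FALSE", "MISLEADING", "EXAGGERATED"}
CHYRON_MAX = 80  # hypothetical character limit for the caption bar


@dataclass
class Verdict:
    speaker: str
    claim: str
    rating: str
    note: str


def check_answer(speaker: str, claims: list[str],
                 checker: Callable[[str], tuple[str, str]]) -> list[Verdict]:
    """Run every factual claim from one answer through the checker."""
    return [Verdict(speaker, claim, *checker(claim)) for claim in claims]


def moderator_list(verdicts: list[Verdict]) -> list[str]:
    """Lines the moderator reads out after the answer: flagged claims only."""
    return [f'{v.speaker}: "{v.claim}" ({v.rating}) {v.note}'
            for v in verdicts if v.rating in FLAGGED]


def chyron(v: Verdict) -> str:
    """Trim one verdict so it fits the on-screen caption."""
    text = f"{v.rating}: {v.note}"
    return text if len(text) <= CHYRON_MAX else text[:CHYRON_MAX - 3] + "..."


def demo_checker(claim: str) -> tuple[str, str]:
    """Hypothetical stand-in for fact-checkers plus chatbot (canned answers)."""
    canned = {
        "Inflation under Biden reached 21%": (
            "MISLEADING", "Peak year-over-year rate was 9.1% (June 2022)."),
    }
    return canned.get(claim, ("UNCHECKED", "No verdict yet."))


if __name__ == "__main__":
    verdicts = check_answer(
        "Trump", ["Inflation under Biden reached 21%", "Some other claim"],
        demo_checker)
    for line in moderator_list(verdicts):
        print(line)
    print(chyron(verdicts[0]))
```

Passing the checker in as a plain function keeps the flow the same whether verdicts come from a chatbot, a retrieval-based system, or a human desk; only the rating set and caption length would need tuning for broadcast.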

For an encore, I asked both Perplexity and ChatGPT what other reforms have been proposed to make debates less about personal attacks and more about policy. Read their answers here and here. The many ideas include sourcing questions from the public; focusing segments of the debate on specific policy areas, or even holding separate debates for them; using subject experts as moderators for each policy area; allotting more time to key issues instead of the same time to all; asking candidates how they’d respond to real-world scenarios; and, yes, live fact-checks after each segment (though not by AI!).

In short, there are many easy fixes for making debates serve the public better. What’s standing in the way, I wonder: the networks, or the candidates’ campaigns?

Links

An interview with the mastermind behind Project 2025. Undercover journalists secretly filmed Russ Vought talking about “destroying [federal agencies’] notion of independence”, organizing “the largest deportation in history,” and keeping the transition plans unobtainable under the Freedom of Information Act. The video is wild. (Center for Climate Reporting)

What’s happened to the Field Fellowship? This 12-month program, led by former New Zealand prime minister Jacinda Ardern, was announced in June by the Center for American Progress. It was designed for “emerging leaders” to “challenge and change the status quo of global politics.” The fellowship website promised an update in July, but none was forthcoming and now the site is down. CAP hasn’t responded to my request for comment. (Center for American Progress)

Futures thinking for governments. An account of getting public servants to imagine “ridiculous-at-first futures” like a moneyless government or universal basic income. Mainly interesting for the links on things like “kindness in public policy” and “better government through DAO.” (Center for Public Impact)

To transform communities, ask them how. While the British government was prescribing solutions for combating inequality, a six-month exercise in consulting with a local community produced several projects better suited to it than the government’s top-down approaches. (Collective Imagination Practice Community)

Why is Texas beating California on renewable energy? Because the oil capital of the US is best understood as the energy capital in general, and its light regulation and self-contained grid make it a better place for innovation. (Good On Paper podcast)

Great post. I’ve been mulling something similar, with the addition of a Pinocchio nose that grows on each candidate as they speak and the non-facts accumulate.

Remember that the training data for the LLMs will include text from political speeches, propaganda, etc.

Using an LLM like this to do fact-checking is absolutely not the way to do it. LLMs are not databases of facts but reasoning and generation engines trained on consensus, not truth.

So, to fact-check with AI, we'd use the reasoning power of an LLM to compare what was said against a trusted source of truth (not the LLM's training data), using a technique such as retrieval-augmented generation (RAG). That would be simple to build.

However, the question then becomes, "What's the source of truth?"

Still, this would be the way to do it, rather than just using ChatGPT or Perplexity, and it would be easy to implement.
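The retrieval-augmented approach this comment describes can be sketched roughly as follows. This is a minimal illustration under loud assumptions: the “trusted” passages are a hypothetical three-item corpus paraphrasing facts cited in the post, retrieval uses plain bag-of-words cosine similarity instead of a real embedding model, and the final LLM call is left out because no particular API is assumed. The point is the shape: retrieve evidence from a curated source first, then ask the model to judge the claim against that evidence only.

```python
import math
import re
from collections import Counter

# HYPOTHETICAL trusted corpus. A real system would use a curated, sourced
# collection (official statistics, bill texts, etc.), not the LLM's own
# training data. These lines paraphrase facts cited in the post.
TRUSTED_PASSAGES = [
    "Year-over-year CPI inflation peaked at 9.1% in June 2022, under Biden.",
    "In 2017 the House passed the American Health Care Act to repeal and "
    "replace major parts of the Affordable Care Act.",
    "The US trade deficit grew larger under Biden than under Trump.",
]

# Tiny stopword list so common words don't dominate the similarity score.
STOPWORDS = {"a", "an", "and", "at", "in", "of", "our", "s", "than", "the",
             "to", "under"}


def tokenize(text: str) -> Counter:
    """Lowercased bag of words minus stopwords (a stand-in for embeddings)."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(claim: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the claim."""
    query = tokenize(claim)
    ranked = sorted(passages, key=lambda p: cosine(query, tokenize(p)),
                    reverse=True)
    return ranked[:k]


def build_prompt(claim: str, evidence: list[str]) -> str:
    """Ask the model to judge the claim ONLY against the retrieved evidence."""
    bullets = "\n".join(f"- {e}" for e in evidence)
    return (
        "Using only the evidence below, rate the claim as TRUE, FALSE, "
        "MISLEADING, or UNVERIFIABLE, and explain briefly.\n"
        f"Evidence:\n{bullets}\n"
        f"Claim: {claim}\n"
    )


if __name__ == "__main__":
    claim = "Inflation under Biden reached 21%, the worst in our nation's history."
    evidence = retrieve(claim, TRUSTED_PASSAGES)
    # The prompt would then go to an LLM; no specific client API is assumed.
    print(build_prompt(claim, evidence))
```

On the inflation claim, the top-ranked passage is the 9.1% figure, but nothing in this toy corpus speaks to “worst in our nation’s history,” so a well-behaved model should call that part unverifiable. That is the “source of truth” problem in miniature: the checker can only be as good as the corpus behind it.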